Requirements Analysis Using NLP

One of our automotive customers asked us to analyze similarities between requirements to simplify the processing of RFPs from OEM car manufacturers. The customer selected 50,000 requirements from several projects, and our task was to use Natural Language Processing (NLP) and deep learning networks to identify semantically similar requirements. Read on to see what we found during this interesting job.

Task Objectives

- Find semantically similar requirements

- Categorize requirements according to similarity level

- Review the results in ReqView

What Is Semantic Similarity?

Semantic similarity describes how much two different documents overlap in their meaning. This contrasts with traditional full-text comparison, which only evaluates whether the documents share a common subset of identical words; the larger that subset, the higher their similarity.

Credit: The example sentences were taken from the SentenceTransformers documentation and the "Advances in Semantic Textual Similarity" blog article and were processed with our pipeline.

Note: Full-text similarity wrongly identifies the sentences "How old are you?" and "How are you?" as similar because they share common words, though their meanings are totally different. In contrast, semantic similarity correctly identifies the sentences "How old are you?" and "What is your age?" as similar, though they do not have a single word in common. Also note that, given a multilingual embedding model, semantic similarity can be evaluated across different languages at no additional cost.
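To make the contrast concrete, here is a minimal sketch of a word-overlap measure. We assume Jaccard similarity (shared words divided by all distinct words) as a stand-in for "traditional full-text comparison"; the article does not say which full-text metric was used, and the function names are illustrative.

```python
import re

def words(text: str) -> set[str]:
    """Lower-case word tokens of a sentence, with punctuation dropped."""
    return set(re.findall(r"[a-z']+", text.lower()))

def jaccard(a: str, b: str) -> float:
    """Word-overlap (Jaccard) similarity: |common words| / |all distinct words|."""
    wa, wb = words(a), words(b)
    if not wa and not wb:
        return 1.0  # two empty texts are trivially identical
    return len(wa & wb) / len(wa | wb)

print(jaccard("How old are you?", "How are you?"))       # 0.75
print(jaccard("How old are you?", "What is your age?"))  # 0.0
```

The word-overlap score ranks the pair with different meanings as highly similar and the paraphrase as completely dissimilar, which is exactly the failure mode described in the note.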

How We Solved Requirements Similarity

The text description of each requirement (including the descriptions of its parent requirements in the document hierarchy) was concatenated into a single chunk of text. This text was then fed into a sentence/document embedding model, such as the Universal Sentence Encoder (USE) or Sentence BERT, which represents each requirement as a vector in a multi-dimensional Euclidean space, e.g., with 512 dimensions in the case of USE.
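The concatenation step might look roughly like the sketch below. The `Requirement` record, the root-first ordering, and the single-space separator are all assumptions for illustration, not the ReqView data model or the exact pipeline we used; the sample requirement texts are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Requirement:
    """Hypothetical minimal requirement record (not the ReqView data model)."""
    req_id: str
    description: str
    parent: Optional["Requirement"] = None

def embedding_text(req: Requirement) -> str:
    """Join the descriptions of all ancestors (root first) and the requirement itself."""
    parts = []
    node: Optional[Requirement] = req
    while node is not None:
        parts.append(node.description)
        node = node.parent
    # Descriptions were collected bottom-up, so reverse to read from the root down.
    return " ".join(reversed(parts))

# Hypothetical example hierarchy: a section heading and one child requirement.
section = Requirement("SYS-1", "Exterior lighting.")
req = Requirement("SYS-2", "The headlights shall switch on automatically at dusk.", parent=section)
print(embedding_text(req))
# Exterior lighting. The headlights shall switch on automatically at dusk.
```

Including the parent context helps short requirements such as "Shall be red." carry enough meaning to be embedded sensibly. The resulting strings would then be passed to the embedding model, for example via `SentenceTransformer.encode` from the sentence-transformers library when a Sentence BERT model is used.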

The embedding space has the property that texts with similar meanings have similar representations, i.e., they are mapped to similar vectors. The similarity of two requirements can then be evaluated by comparing their embedding vectors, e.g., by measuring the angle between them. This approach can even compare texts written in different languages if a multilingual embedding model is used, because such a model maps similar texts to similar embedding vectors regardless of the language they are written in.
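As a minimal sketch of the angle-based comparison, the functions below compute the cosine of the angle between two embedding vectors and the angle itself. Cosine similarity is a common choice for this, but we are assuming it here; the article only says the angle between vectors is measured. The 3-dimensional toy vectors stand in for real embeddings.

```python
import math

def cosine_similarity(u: list[float], v: list[float]) -> float:
    """Cosine of the angle between two embedding vectors: 1.0 means same direction."""
    if len(u) != len(v):
        raise ValueError("vectors must have the same dimension")
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_u * norm_v)

def angle_degrees(u: list[float], v: list[float]) -> float:
    """Angle between two embedding vectors in degrees: smaller means more similar."""
    # Clamp to [-1, 1] so rounding error never pushes the value outside acos's domain.
    c = max(-1.0, min(1.0, cosine_similarity(u, v)))
    return math.degrees(math.acos(c))

# Toy 3-dimensional vectors; real USE embeddings have 512 dimensions.
u = [1.0, 0.0, 0.0]
v = [1.0, 1.0, 0.0]
print(round(cosine_similarity(u, v), 4))  # 0.7071
print(round(angle_degrees(u, v), 1))      # 45.0
```

Because the cosine depends only on direction, two texts whose vectors differ in length but point the same way are treated as equally similar.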

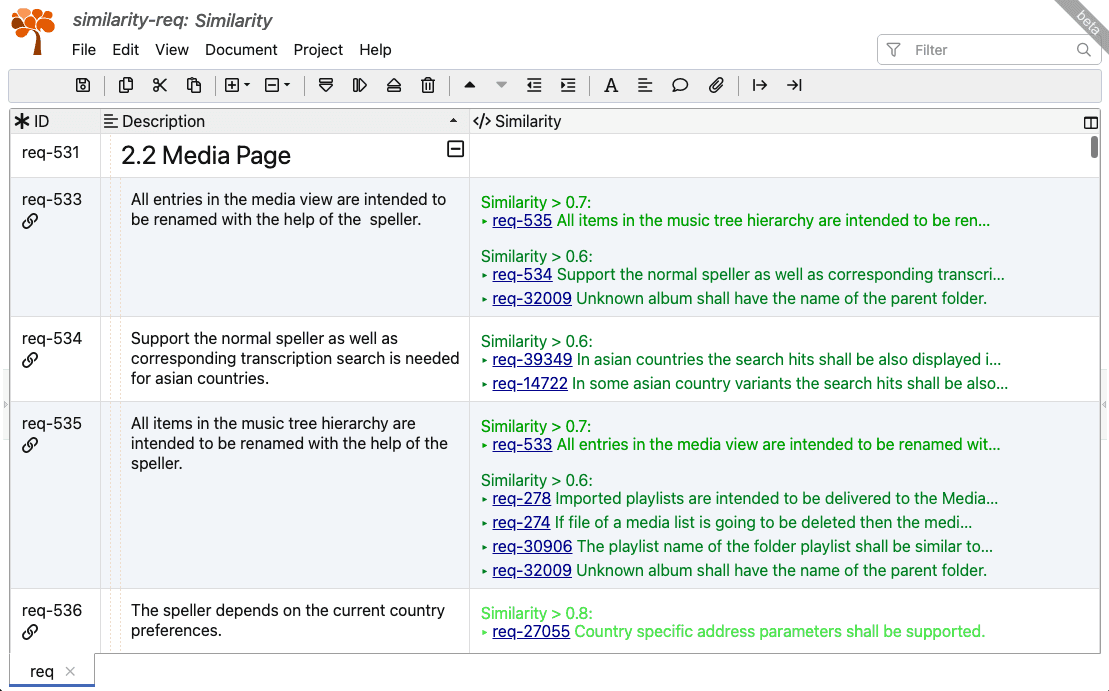

How We Displayed Requirements Similarity in ReqView

We represented relations between similar requirements as traceability links grouped by similarity level. On top of that, we mapped similarity levels to colors to further improve the clarity of the output.
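The grouping of links by similarity level and the mapping of levels to colors could be sketched as follows. The thresholds, level names, colors, and requirement IDs are hypothetical; the actual values used in the project are not published, and the scores are assumed to be precomputed pairwise similarities.

```python
# Hypothetical thresholds, level names and colors, sorted by descending threshold.
LEVELS = [
    (0.9, "very high", "red"),
    (0.8, "high", "orange"),
    (0.7, "medium", "yellow"),
]

def similarity_level(score: float):
    """Return (level, color) for the highest threshold the score reaches, else None."""
    for threshold, level, color in LEVELS:
        if score >= threshold:
            return level, color
    return None

def group_links(scores: dict[tuple[str, str], float]) -> dict[str, list[tuple[str, str]]]:
    """Group requirement pairs (candidate traceability links) by similarity level.

    Pairs scoring below the lowest threshold get no link at all.
    """
    groups: dict[str, list[tuple[str, str]]] = {level: [] for _, level, _ in LEVELS}
    for pair, score in scores.items():
        result = similarity_level(score)
        if result is not None:
            level, _color = result
            groups[level].append(pair)
    return groups

scores = {("R1", "R2"): 0.93, ("R1", "R3"): 0.75, ("R2", "R3"): 0.41}
print(group_links(scores))
# {'very high': [('R1', 'R2')], 'high': [], 'medium': [('R1', 'R3')]}
```

Each level corresponds to one group of traceability links; how the colors are then applied in ReqView is outside the scope of this sketch.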

We extended the ReqView table view with a custom traceability column, Similarity, which displays suggestions of similar requirements.

We were surprised by how usable the similarity search in ReqView turned out to be. Sometimes, we had fun with obvious misinterpretations and unexpected relations found by the algorithm :-)

Potential Benefits

- Achieve a higher-quality requirements specification

- Detect missing traceability links between requirements with similar descriptions

- Identify overlapping requirements

- Save time through

  - Smart import of requirements

  - Reuse of similar requirements